How to protect yourself against deepfake scams in video calls

Public concern over real-time video scams is growing worldwide as major incidents become more frequent. Take the Hong Kong multinational that recently fell prey to a scammer in a colossal $25.6 million heist: deepfake technology has already evolved enough to enable a whole new brand of fraud.

What remains is a call to action. Are there ways to protect yourself and your organization against con men posing as your boss, your business partner, or even your own mother? Let’s find out!

First things first, let us start with the definition.

What is a deepfake?

If the term is still unclear, a deepfake is content generated using deep learning techniques that is intended to look real but is in fact fabricated. The artificial intelligence (AI) used to generate deepfakes typically employs generative models, for example Generative Adversarial Networks (GANs) or autoencoders.

Deepfakes are used not only in video content, but also in audio recordings and images. The purpose of a deepfake is often to depict an individual or a group saying or doing something that they never did in reality. To produce convincing content, the AI must be trained on large datasets. This allows the model to recognize and reproduce the natural patterns present in the content it is designed to mimic.

While deepfake technology is a breakthrough with great potential in the film industry and game development, as well as a rising social media trend, it also opens dangerous opportunities for illegal use. The examples are numerous and include identity theft, evidence forging, disinformation, slander and biometric security bypass. In all cases, fraudsters typically leverage the depicted person’s authority over the targeted individuals or personal connection to them, depending on the setting.

Secure your calls with Nextcloud Hub

Watch our webinar on secure conferencing in Talk and learn how to set up reliable access control, prevent leaks and trace all suspicious activity.

Where can you encounter a deepfake?

Deepfakes are used to produce video, audio or image content, either as recorded media or as a real-time stream. It can be a YouTube video, a ‘leaked’ recording in a social post, a phone call or a video conference – the opportunities are practically unlimited.

The format is picked to suit the purpose. For example, political disinformation works best where mass engagement is possible, so spreading it publicly via social media is the best tactic. Seeking private gain from a company or an individual, by contrast, requires a more intimate setting and often a personal conversation.

When it comes to threats to your personal life, finance or security, we can narrow down the most dangerous deepfake scenarios to encounters with people you care about, trust, or report to. This can be a family member, a friend, or an authority figure at work such as your boss or a company executive.

The setting will most likely be private: a phone call or a video meeting. One-on-one conversations are much easier to execute and give the faker much more control over the situation. The conversation, whatever the background is, will lead you to an action under a sense of urgency or fear – most likely to transfer a sum of money. The tactic is to override your logic and common sense using fear, compassion or even ambition.

As generative AI development attracts huge interest and investment, we are entering a dangerous zone: real-time video, the most sophisticated and convincing deepfake use case yet, still gets very little public awareness.

Deepfakes in real-time video

Real-time video deepfakes generate manipulated video on the fly for immediate use during live streams and video calls. Voice cloning and face swapping are the techniques most frequently combined to compose a complete faked environment.

Face swapping

Face swapping is a common application of deepfakes, allowing the software to replace the facial features of a target person with fake features, most often those of another person. With facial landmark detection and manipulation techniques, the blending appears seamless and is hard to spot if you are caught unaware.

Voice cloning

In addition to looking convincing, a faker also needs to sound convincing. For this part, voice cloning is used. In voice cloning, the AI replicates the voice of the individual. A significant amount of high-quality audio data is required to train a voice cloning model, usually obtained from recordings of the target person speaking in various contexts and using different intonations.

Curiosity time: how does a deepfake setup actually work?

Deepfake technology is capable of impersonating real-life individuals and doing it in a real-time setting, making the result even more convincing (and terrifying!). But how does the software plug into the familiar meeting platforms where we encounter deepfakes?

Deepfake generation software can be integrated with streaming platforms and video conferencing tools in many ways:

- It could function as a separate application that captures the video feed, processes it in real-time, and then sends the manipulated feed to the video conferencing software.

- Alternatively, it might be integrated directly into the video conferencing software as an optional feature or plugin.

- Another way, even more sophisticated and harder to detect, is to go through the camera input using a virtual camera. The virtual camera intercepts the video feed from the faker’s physical camera and outputs the manipulated feed to the video conferencing software. The faker just picks the virtual camera as their camera input and voilà! (not funny, we know).

How to protect yourself against deepfakes?

Finally, to the most important part. How do you protect yourself against a deepfake, or at least get prepared to spot a fake boss making a sketchy request over video?

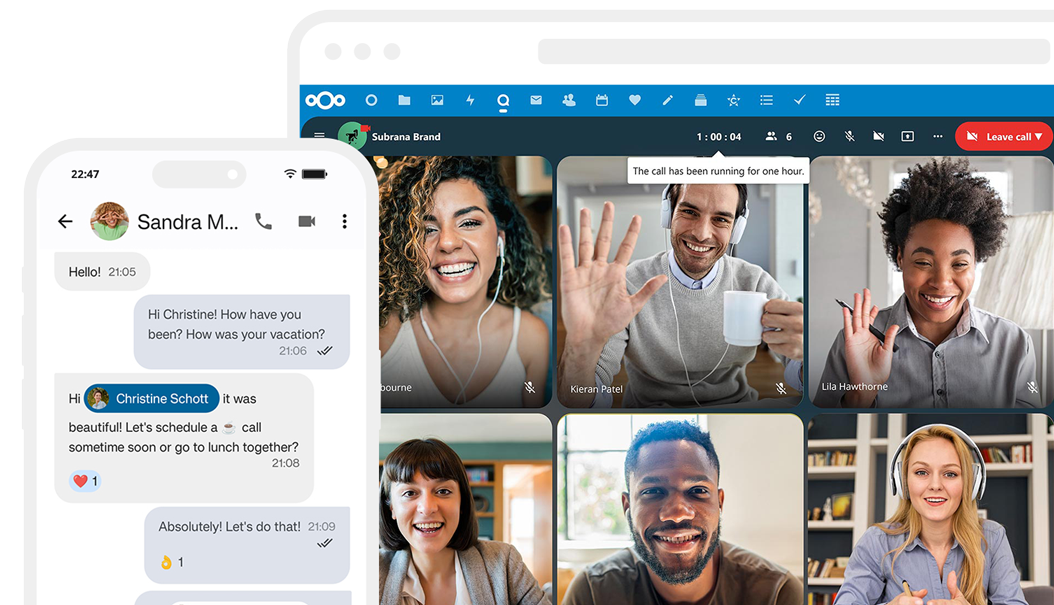

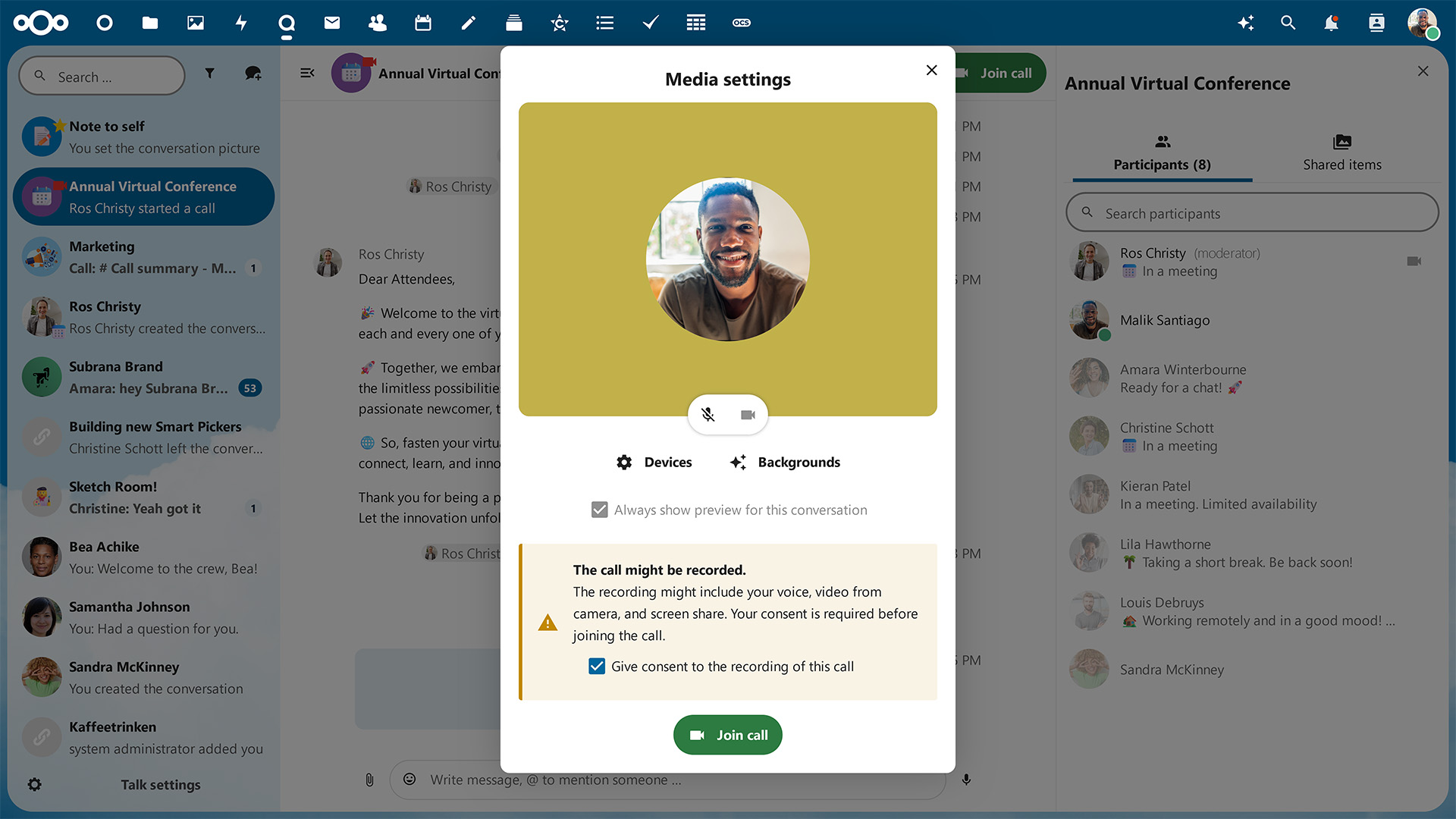

Privacy-first videoconferencing software is a key to safe meetings. Meet Nextcloud Talk, a powerful chatting and meeting platform that lets you regain control.

Watch out for red flags

AI face swapping technology may be advanced, but it’s not perfect. There are red flags you can spot, or at least learn to look out for when something seems off or unnatural:

- Unrealistic facial expressions or movements, including unnatural eye movements, inappropriate blinking, and/or weird lip sync.

- Inconsistencies in lighting and shadows that don’t match the surroundings.

- Unnatural head or body movements, as well as visible blurring or pixelation around the face or neck.

- Inconsistent audio and video quality, or a mismatch between the picture and the sound.

Suspicious? Be proactive

There are methods to help you fish out the red flags that generally won’t make the conversation awkward if the person is in fact real.

First, there’s nothing more natural than a casual conversation. Engage in small talk: ask about their day, their routine, or people you both know. A complete stranger will struggle to be spontaneous and maintain the same personal connection. It’s also easier to catch someone off guard when they lose their sense of control.

You can also use other video conferencing features: ask the person to share their screen and show you something related to your common tasks. This will be very difficult to replicate without access to the real person’s accounts and files.

Finally, once they make a suspicious request, you have more freedom to be openly cautious: politely ask them to confirm their identity by providing information only the real person would know, or by sending you a confirmation message via a different channel.

Set up a passphrase

One more way to ensure confidence when it comes to sensitive topics is setting up a password or passphrase. This is an easy way to confirm the identity of the people you know, both at work and between family members, and it is equally effective via voice, video and text communication.
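For text-based channels, one possible refinement is to avoid sending the passphrase itself, since anyone who sees it once can reuse it. The sketch below is an illustration only, not a Nextcloud feature: the verifier issues a random challenge, and the other party answers with a short code derived from the shared passphrase and that challenge. The function names, the salt handling and the 8-character code length are all assumptions made for this example.

```python
import hashlib
import hmac
import secrets


def derive_key(passphrase: str, salt: bytes) -> bytes:
    """Stretch the shared passphrase into a key with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac("sha256", passphrase.encode("utf-8"), salt, 100_000)


def new_challenge() -> str:
    """Create a random one-time challenge (8 hex characters)."""
    return secrets.token_hex(4)


def respond(passphrase: str, salt: bytes, challenge: str) -> str:
    """Compute the short response code for a given challenge."""
    key = derive_key(passphrase, salt)
    digest = hmac.new(key, challenge.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:8]  # shortened so it can be typed or read out (assumption)


def verify(passphrase: str, salt: bytes, challenge: str, response: str) -> bool:
    """Check a response, ignoring surrounding whitespace and letter case."""
    expected = respond(passphrase, salt, challenge)
    submitted = response.strip().lower()
    return hmac.compare_digest(expected.encode("utf-8"), submitted.encode("utf-8"))


if __name__ == "__main__":
    salt = b"agreed-in-person"  # hypothetical value both sides agreed on
    challenge = new_challenge()
    answer = respond("our family passphrase", salt, challenge)
    print(challenge, answer, verify("our family passphrase", salt, challenge, answer))
```

Because every challenge is fresh, a code overheard in one call is useless in the next. For a spoken check between family members, a plain agreed phrase remains the simpler option.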

Verify identity outside of the meeting

If a faker poses as a person you know well, chances are you have more than one communication channel to reach the real person. Use email, a messenger or a personal phone number to contact them and ask about the request. You have a valid reason to double-check.

Don’t let them harvest your data

To replicate and manipulate a person’s voice or image, AI needs a massive amount of data. This data is often gathered beforehand, during online calls and meetings. Features like Recording Consent in Nextcloud Talk may help you protect yourself and others from such a data haul.

Use company software

It’s unlikely for your real boss to set up a meeting via a platform you never use for work. And if they do, they must have a good reason! Don’t be afraid to stand up to suspicious activity.

Using company software means better control over the data and compliance with privacy regulations. It’s even better if you run it on-premises! Should an incident happen, the company IT team can run an audit to retrieve the relevant data and investigate.

Ensure secure access to your videoconferencing platform with settings like 2FA, strong passwords, data encryption, activity monitoring, and login restrictions. This applies to your personal settings and administrative controls.
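To make the 2FA item less abstract, here is a minimal sketch of how the time-based one-time codes used by most authenticator apps are derived (TOTP, RFC 6238, built on HOTP from RFC 4226). It is meant to show why a stolen password alone is not enough, not to describe how any particular platform implements 2FA. The function names and the one-step drift window are assumptions for this example; in production, use a maintained library.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over a time counter."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at=None,
                step: int = 30, window: int = 1) -> bool:
    """Accept codes from the current time step and `window` steps either side."""
    now = time.time() if at is None else at
    counter = int(now // step)
    for drift in range(-window, window + 1):
        if counter + drift < 0:
            continue  # no time steps before the epoch
        expected = hotp(secret, counter + drift)
        if hmac.compare_digest(expected.encode("utf-8"), submitted.encode("utf-8")):
            return True
    return False


if __name__ == "__main__":
    demo_secret = b"12345678901234567890"  # test secret from the RFCs
    print(totp(demo_secret))
```

The code changes every 30 seconds and depends on a secret that never leaves the user’s device, so a scammer who has phished a password still cannot log in to the meeting platform as that user.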

Nextcloud Talk: video and chat with privacy in mind

Using a privacy-oriented, unified workspace with admin control in all apps ensures your security protocols are in place to detect and prevent breaches. Nextcloud Hub provides a user-friendly videoconferencing platform that keeps users happy to stay within company IT.

How Nextcloud Talk protects your data:

- AI-powered suspicious login detection

- Multi-layered encryption with end-to-end encrypted communication

- Brute-force protection

- Fully on-premises, 100% open source

Nextcloud is an open-source project backed by a strong community with a proactive approach to vulnerability research and patching. It is designed to let you stay compliant with GDPR, CCPA, and the upcoming EU ePrivacy Regulation.